Fostering a Strong Engineering On-Call Culture

When I first joined the on-call team at Button, I had a lot of anxiety about getting paged. What if I slept through a page? What does that service even do? Which dashboard am I supposed to be looking at? Since then, I’ve been on the rotation for almost a year and have responded to countless pages. While I cannot profess that I enjoy being on-call, I can attest that being a member of the on-call rotation has definitely been a highlight of my role at Button — in large part due to the support and culture of our on-call team.

What is On-Call and Why Does Culture Matter?

In operations and engineering, an on-call team is typically responsible for ensuring the reliability and availability of services and applications. If the on-call team is not set up for success, there are clear consequences to the business: direct revenue loss and reputation damage. Less visible but just as impactful are the stress and frustration that an unhealthy on-call culture can breed within an engineering organization. Engineering is as much about maintaining software as it is about building it. It’s difficult to build scalable software if you are constantly worried about production outages!

An outage at 2:00 am can be both damaging to the company if not handled properly and frustrating and alienating for the individual who must get out of bed to address the related page. It is important for a healthy on-call culture to be mindful of both perspectives. In this post, I will outline some of the Button on-call team’s best practices.

On-Call @ Button

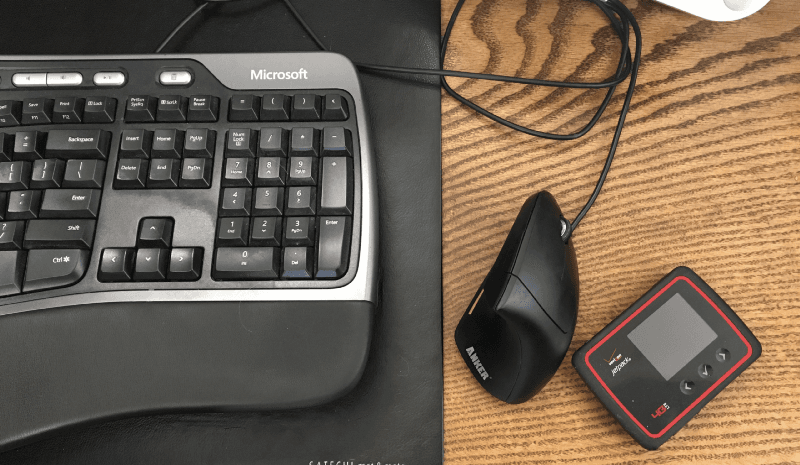

At Button, the product engineers building the services are also responsible for guaranteeing 24/7 uptime for all critical services. To do this, we maintain a weekly on-call rotation that starts at noon on Fridays, when we have a physical handoff to swap WiFi hotspots. There are always two people on-call: a primary and a secondary. If the primary is unresponsive or unavailable when they receive the initial page triggered by an outage, the page is escalated to the secondary. The engineering on-call team also has a business team counterpart that handles partner-facing escalations and external communication regarding outages.
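The primary-then-secondary escalation described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the function name `escalate`, the `acknowledges` callback, and the responder labels are hypothetical, and in practice a policy like this would normally live in the paging tool's configuration rather than in application code.

```python
from typing import Callable, Optional, Sequence


def escalate(chain: Sequence[str], acknowledges: Callable[[str], bool]) -> Optional[str]:
    """Page each responder in order and return the first one who acknowledges.

    Returns None if nobody in the chain acknowledges the page.
    """
    for responder in chain:
        if acknowledges(responder):
            return responder
    return None


# Hypothetical rotation: the primary is paged first, then the secondary.
rotation = ["primary", "secondary"]

# Simulate a primary who sleeps through the page.
owner = escalate(rotation, acknowledges=lambda who: who != "primary")
print(owner)  # prints "secondary"
```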

Last but not least, being on-call at Button is voluntary. Not all product engineers are on the rotation, and engineers are free to join or leave at any time. Because being on-call is a unique responsibility and often requires unique sacrifice, Button gives every member of the on-call team a modest monthly bonus as a gesture of appreciation.

What Makes Our On-Call Team Successful?

Below are a few of our core philosophies surrounding our on-call culture:

- Having an On-boarding Process

- Setting the Right Expectations

- Single Point Person

- Blameless Postmortems

- Our Alerting Philosophy

- Encouraging Self-Care

The On-boarding Process

Setting up for success starts at the very beginning. We don’t just add people to the on-call rotation and hope for the best. Our on-boarding process is as follows:

- Shadow a current on-call engineer.

- Be primary during work hours for a week and pair on a real escalation.

- Handle an escalation by yourself — we simulate an escalation for a new engineer to triage and resolve.

- Get added to the rotation.

Setting the Right Expectations

Handling an escalation doesn’t necessarily mean you ultimately resolve the root cause behind every issue, or even have the expertise to do so.

To quote our Escalations Handbook:

Think of it like a hospital: The ER physicians are there to triage and stabilize the patient, then send the patient onward to more specialized caregivers.

When we get paged, we’re not expected to solve every problem. We’re expected to assess the issue, determine the impact to the business, communicate externally via our status page, and mitigate the damage to the best of our ability. Often, follow-up tasks are needed to ensure that an escalation is fully resolved and doesn’t become a recurring incident. We put a great deal of trust in our engineers to make the best judgment call, and it takes experience and practice to consistently make the right call during escalations.

Single Point Person

Sometimes high-pressure escalations can be chaotic. There could be many people involved or many systems at play, and it quickly becomes critical to keep track of what changes are in flight and who’s doing what. We abide by the philosophy that at all times a single point person will be in charge of the escalation response.

Our handbook states:

The point person is responsible for all decision-making during an escalation, including making production changes and deciding what and when to communicate with other parties. If you are not the point person, you should not be doing these things unless the point person has explicitly requested and directed you to help.

The point person can change during an escalation, as long as the change is explicit (e.g., “The production issue is solved, Barry is going to take point from here.”).

Blameless Postmortems

We strive to learn from our mistakes, and a big part of that is a culture of blameless postmortems.

Blameless postmortems allow us to speak honestly and boldly about what we could have done better and reflect on what we’ve learned. This is the place where engineers advocate for critical infrastructure improvements or changes to existing processes. It also gives the rest of the company an opportunity to weigh in, discuss the business impact, and set future expectations.

The postmortem is also the opportunity to dive deep into the root cause of an issue. We believe strongly in having a mystery-free prod: if our production system behaved in an unexpected way, we want to know why.

Our Alerting Philosophy

We use Prometheus as part of our monitoring stack. Alerting rules are committed to GitHub and peer reviewed. Alertmanager sends alerts to PagerDuty, email, and Slack. We have three types of alerts:

- Non-paging alerts: send alerts only to email and Slack

- Daytime paging alerts: send alerts to PagerDuty during daytime hours but otherwise only send to email and Slack

- Paging alerts: send alerts to PagerDuty, email and Slack at all times.
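As a rough illustration of the three alert types above, the routing decision can be written as a small Python function. This is a sketch, not our actual Alertmanager configuration: the type labels (`non_paging`, `daytime_paging`, `paging`), the function name `receivers`, and the 9:00 to 18:00 daytime window are all assumptions made for the example.

```python
from datetime import time
from typing import List

# Assumed daytime window; the real hours are not stated in this post.
DAY_START = time(9, 0)
DAY_END = time(18, 0)


def receivers(alert_type: str, now: time) -> List[str]:
    """Return the channels an alert of the given (hypothetical) type is sent to."""
    always = ["email", "slack"]
    if alert_type == "non_paging":
        return always
    if alert_type == "daytime_paging":
        # Page only inside the daytime window; otherwise fall back to email and Slack.
        if DAY_START <= now < DAY_END:
            return ["pagerduty"] + always
        return always
    if alert_type == "paging":
        return ["pagerduty"] + always
    raise ValueError(f"unknown alert type: {alert_type}")


print(receivers("daytime_paging", time(2, 0)))   # ['email', 'slack']
print(receivers("daytime_paging", time(14, 0)))  # ['pagerduty', 'email', 'slack']
```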

Any engineer on the team is empowered to add or remove an alert, but all alerts should be actionable and must have a playbook. Our playbooks are meant to be brief and describe the specific actions that can be taken when an alert is triggered. Each playbook entry contains a brief description of the business impact, helpful bash commands, and links to appropriate dashboards. The goal is for any engineer, regardless of how much context they have, to be able to come in and quickly triage an issue using the information in the playbook.

To track whether our alerts are truly actionable, we borrowed some concepts from data science and measure the precision and recall of our alerts. In this case, precision refers to the percentage of alerts fired that were actionable, and recall refers to the percentage of actionable incidents that our alerts notified us about. When precision is low, we evaluate whether specific alerts should be disabled or modified so that they fire less readily, for example by adjusting their threshold. When recall is low, we try to see if there’s a better signal to measure the actual symptoms that we should be responding to.
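To make the two definitions concrete, here is a minimal sketch of the arithmetic in Python. The function names and the review data are made up for illustration; how alerts and incidents get labelled as actionable is a manual judgment that the code does not capture.

```python
from typing import Sequence


def precision(alert_was_actionable: Sequence[bool]) -> float:
    """Fraction of fired alerts that turned out to be actionable."""
    if not alert_was_actionable:
        raise ValueError("no alerts fired in this period")
    return sum(alert_was_actionable) / len(alert_was_actionable)


def recall(incident_was_alerted: Sequence[bool]) -> float:
    """Fraction of actionable incidents that an alert notified us about."""
    if not incident_was_alerted:
        raise ValueError("no actionable incidents in this period")
    return sum(incident_was_alerted) / len(incident_was_alerted)


# Made-up review data: one entry per fired alert (was it actionable?)...
alerts = [True, True, True, False]
# ...and one entry per actionable incident (did an alert notify us?).
incidents = [True, True, True, False, False, False]

print(precision(alerts))   # 0.75
print(recall(incidents))   # 0.5
```

In this example a quarter of the alerts were noise (a precision problem), while half of the real incidents went unnoticed by alerting (a recall problem), and the two call for different fixes.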

Encouraging Self-Care

Whether it is being paged at night or having to reschedule a dinner, on-call can be disruptive to your personal life. However, when set up properly, on-call should not be an undue burden on any individual. Below are a few ways the on-call team at Button ensures that our engineers don’t get burnt out:

- At the end of the week, we strive to leave the rotation better off for the next person. If there are open issues, we close them out or hand them to the product team owners.

- We maintain an on-call history so if an escalation resurfaces, you can see how a previous engineer handled and resolved it.

- While handling an escalation, our on-call engineers use Slack to communicate the play-by-play of their decisions and actions so others can follow along.

- Our on-call schedule is flexible. If someone needs to take a few hours off, we Slack our ops channel to see if anyone is available to substitute. If the primary gets paged at night, we encourage them to swap out the next day so they can get the rest they deserve.

- We are encouraged and equipped to ask for help both during and outside of work hours. Nothing is more frustrating than getting a page where you simply don’t know where to even start. We know that it’s always better to ask for help than to act on the wrong assumptions. We store our on-call team’s contact info in PagerDuty so that if an emergency arises outside of work hours, we are empowered to reach the relevant people.

Support Each Other

Button is now at the size where it’s not reasonable to expect every engineer to know how every service works. Not only do our on-call engineers come from different engineering disciplines, but they also work on different product teams at Button. Not everyone is a DevOps expert and certainly not everyone is familiar with every piece of software in our system. However, we support each other as a team and help each other grow our strengths, overcome challenges, and learn new skills. The experiences I’ve gained while on-call have taught me how to design and build better software, how to communicate clearly, and how to be a more empathetic teammate.

Original post published here.